1. Introduction

1.1 What is Entity Resolution?

Entity Resolution (ER) is the process of identifying and linking records across datasets that refer to the same real-world entity - whether it's a person, business, address, or other object.

In practice, this means answering questions like:

- Are “Alice Johnson” and “A. Johnson” in different datasets the same person?

- Do “Tech Solutions LLC” and “Tech Solutions Limited” refer to the same company?

- Are two differently formatted addresses actually the same physical location?

Done well, entity resolution creates cleaner, more trustworthy data, which forms the necessary backbone for better analytics, signal extraction and customer experiences.

1.2 Why it matters

Messy, non-deduplicated data is often a significant blocker for downstream decision-making and automation. Entity resolution help you:

- Eliminate duplicates that inflate company headcount or lead to redundant outreach

- Join records across sources where there’s no shared unique identifier

- Enrich data with external information while avoiding mismatches

- Build accurate customer or company profiles that support sales, marketing, investing and recruiting.

Whether you’re resolving records from multiple sources, enriching internal data or standardizing business information across systems, entity resolution is a critical (and often underestimated) process.

1.3 Who this guide is for

This guide is designed for data practitioners and technical teams ingesting and/or joining large-scale datasets (and oftentimes more than one). Whether you’re just starting to explore entity resolution or are looking for ways to scale your approach, this guide is for you.

The intended audience includes:

- Data engineers designing ETL pipelines or matching logic

- Analysts validating deduplication and other data quality metrics

- Technical onboarding teams supporting customer integrations

- Product and operations teams responsible for profile accuracy and scale

In this guide, we’ll walk through both foundational techniques as well as more advanced strategies with clear examples and implementation notes throughout.

2. Building an Entity Resolution Pipeline

Entity resolution isn’t a one-off task - it’s an ongoing process. An ER pipeline helps you operationalize everything from cleaning and matching to outputting usable, deduplicated data.

2.1 Pipeline Overview

A strong entity resolution pipeline typically includes the following stages

- Ingestion: Bring in raw data from various sources (i.e. CRM, external data providers, APIs, etc)

- Cleaning & Preprocessing: Deduplicate, fix typos and initial processing to ensure field consistency

- Standardization and Parsing: Apply common formats to fields like phone, addresses, names, etc and decompose compound fields into subcomponents for better matching (e.g. splitting full names)

- Matching Logic: Use a mix of rule-based strategies, machine learning and external references to decide which records are match candidates

- Resolution Decision: Decide which matching records to merge, flag or hold for manual review (including exact matches, probable matches, uncertain/outlier cases)

- Output & Integration: Resolve matches and load into your downstream systems (e.g. data warehouse, CRM, etc)

2.2 Tips for Scalability and Maintenance

- Modularize each pipeline stage so it can be reused across datasets or projects

- Log your match decisions (especially for fuzzy/ML-based matches). This can make auditing and future improvements much easier to implement

- Version your rules and models, and monitor metrics like match rate, false positives, and unresolved records

- Include manual review paths for low-confidence matches, especially early in development, but even at scale.

Over the remainder of this document, we’ll explore each of the core pipeline stages in detail - so let’s dive in!

3. Foundation: Preparing Your Data

Before any sophisticated matching can happen, your data needs to be in shape. Preparing for entity resolution starts with cleaning, standardizing and parsing your records so that downstream processes work on reliable inputs.

3.1 Data Cleaning and Preprocessing

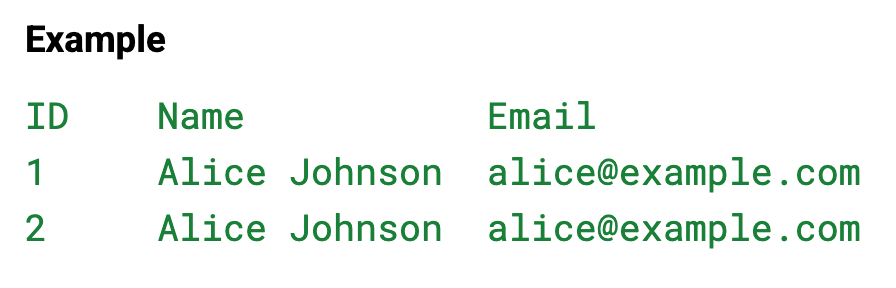

Removing Duplicates

Duplicate records are one of the most common data quality issues. Even exact copies can sneak into a dataset due to repeated imports, form resubmissions or batch errors.

Solution

- Identify duplicates based on stable fields (e.g. email, phone number, or ID)

- Use SQL queries, data cleaning libraries (like pandas.drop_duplicates()) or deduplication tools to remove simple duplicates

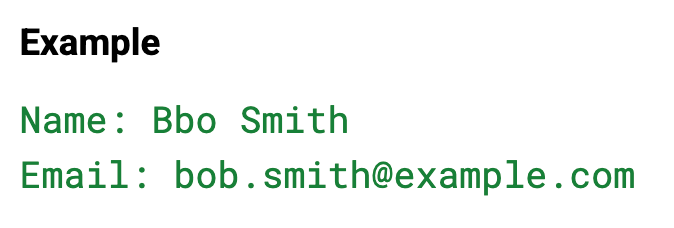

Correcting Typos and Errors

Simple misspellings can derail matching logic, especially for names and email addresses.

Solution

- Apply spell-check tools or fuzzy matching to catch and correct errors

- In high-sensitivity cases, add a manual review step to ensure accuracy

3.2 Data Standardization and Normalization

Standardization ensures that data across records follows the same format, which is crucial for reliable comparisons.

Phone Numbers and Addresses

Phone numbers can appear in dozens of formats. Likewise, addresses may use different abbreviations, casing or country codes.

Solution

- Convert phone numbers to a standard format like E.164 (+11234567890)

- Normalize addresses using an API (e.g. Google Maps, USPS, OpenStreetMap)

Naming and Case Consistency

Normalize names, titles, and other text fields for consistent comparisons.

Tips

- Convert to lowercase or title case

- Remove extra whitespace or punctuation

- Use consistent abbreviations (e.g. “St.” vs “Street”)

3.3 Parsing and Decomposition

Breaking complex fields into structured components gives you more to work with when matching.

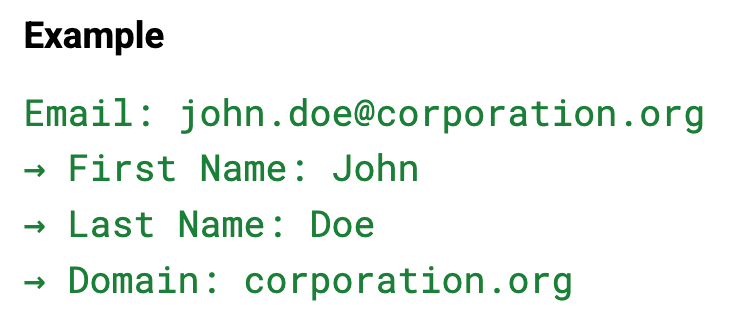

Email Parsing

From an email address, you can often infer name and organization details

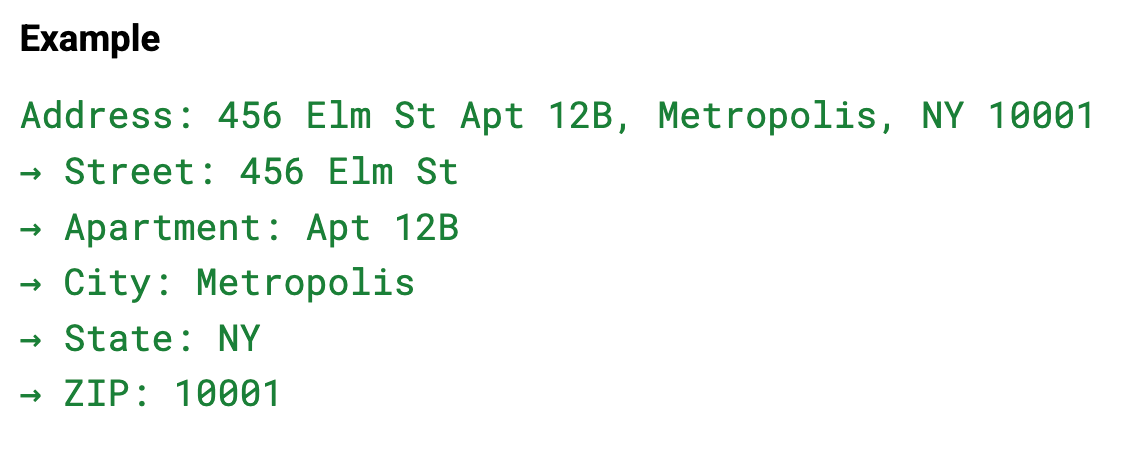

Address Parsing

Addresses often need to be decomposed into street, city, state, and zip/postal code.

Implementation

- Use regular expressions or address parsing libraries (like useaddress, libpostal or commercial APIs) to split emails and addresses into their constituent parts

- Always test against real-world data to catch and handle edge cases

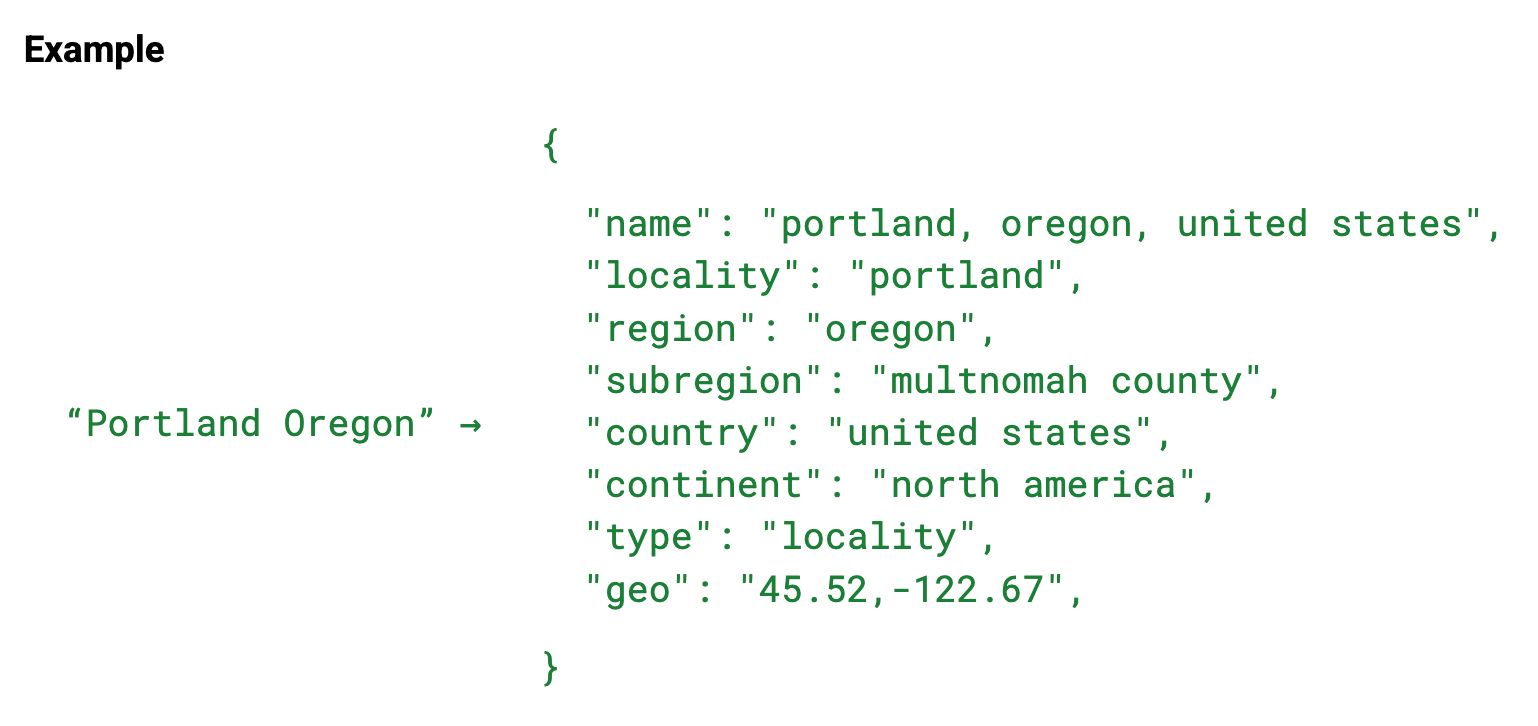

3.4 (Bonus) PDL Cleaner APIs

It shouldn’t come to you as a surprise that we at PDL have various data cleaning and standardization systems in place for our own entity resolution pipelines. But you may not know that we offer free public access to the same cleaning and standardization systems we use in production via our Cleaner APIs.

We offer 3 Cleaner APIs specifically for cleaning:

- Locations

- Companies

- Schools

Each of these endpoints takes in a raw string and returns cleaned, structured and canonicalized JSON structures representing the resolved entity.

We highly encourage you to try these APIs out when working through your data standardization processes. It not only helps you shortcut your development process, but also enables you to match your data to PDL’s right out of the box.

You can find more about the Cleaner APIs on our documentation pages:

Cleaner APIsBy investing in these foundational steps, you will dramatically improve the effectiveness of the matching and entity resolution techniques we’ll cover in the following sections.

Next, we’ll explore rule-based strategies for connecting records across datasets.

4 Rule-Based Matching Techniques

Once your data is clean and structured, the next step is to compare records using deterministic rules. Rule-based matching doesn’t rely on training data or machine learning - it’s transparent, tunable and often highly effective for known patterns and business logic.

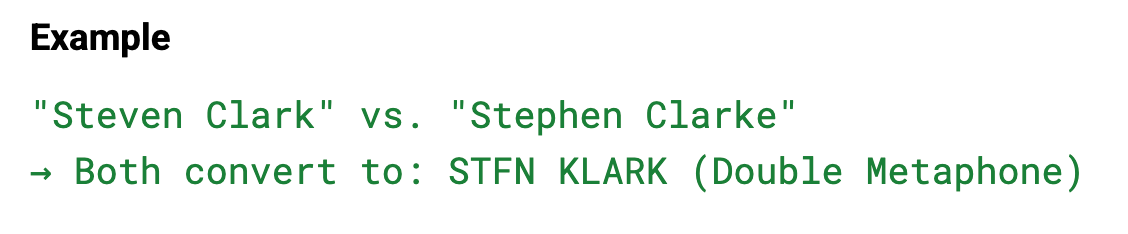

4.1 Phonetic Algorithms

Phonetic algorithms help catch similar-sounding names that may be spelled differently.

Common Techniques

- Double Metaphone: A relatively accurate phonetic-coding algorithm that works well across names and languages

- Soundex: A simpler phonetic-coding technique, but that is more limited than the Double Metaphone technique.

Use When

- Comparing names in lead or customer data

- Handling regional spelling variations

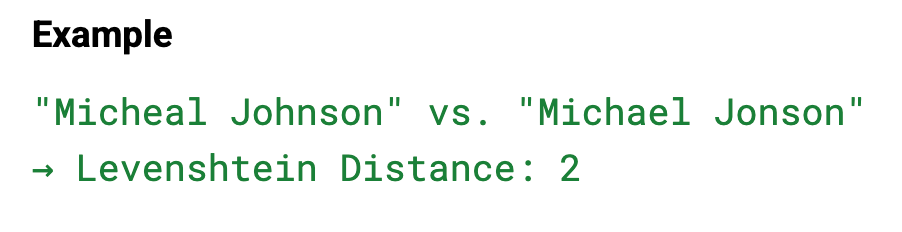

4.2 Fuzzy Matching

Fuzzy matching calculates similarity between strings to help identify values that are “close enough”.

Key Techniques

- Levenshtein Distance: A string similarity metric that scores using the edit distance between two strings (insertions, deletions, substitutions)

- Jaro-Winkler: Another string similarity metric that prioritizes the beginning-of-string similarity

Implementation Tips

- Set a similarity threshold (e.g. match if similarity score > 90%)

- Log matches near the threshold for manual review to ensure the threshold is appropriate for your needs

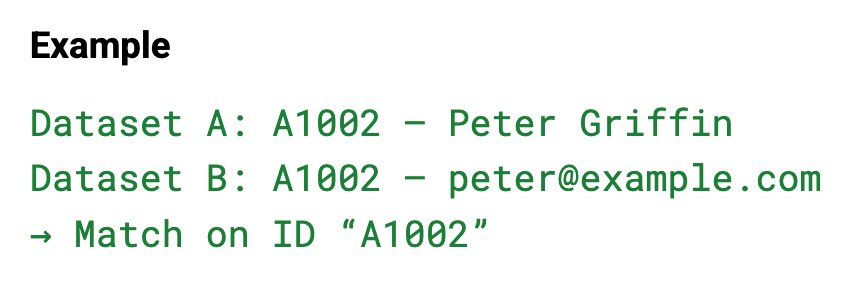

4.3 Unique Identifiers

When present, unique IDs are the fastest and most accurate way to link records.

Best Practices

- Use stable identifiers like CRM IDs, PDL profile IDs or hashed emails

- For missing IDs, you can infer matches through consistent fields like email or phone (but take care as overly lax rules here can lead to incorrect matching errors)

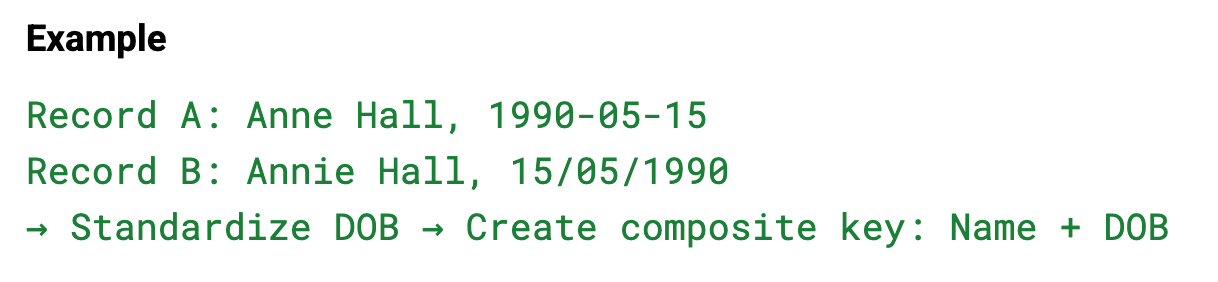

4.4 Composite Keys and Cross-Field Matching Strategies

When no single field is a reliable identifier on its own, you can use a combination of fields instead to create composite keys.

Tips

- Normalize fields before combining (e.g. date formats, name casing, etc)

- Use composite keys for joins when dealing with partial or inconsistent identifiers

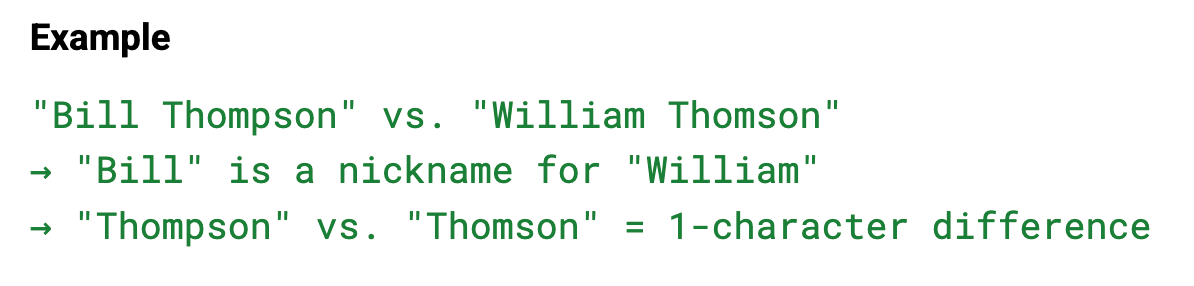

4.5 Handling Nicknames and Variations

Nicknames and common misspellings can cause mismatches in otherwise clear cases.

Tips

- Use a nickname dictionary (e.g. Bill → William, Liz → Elizabeth)

- Apply fuzzy matching to catch minor variations or typos

- Consider phonetic encoding to as a secondary check

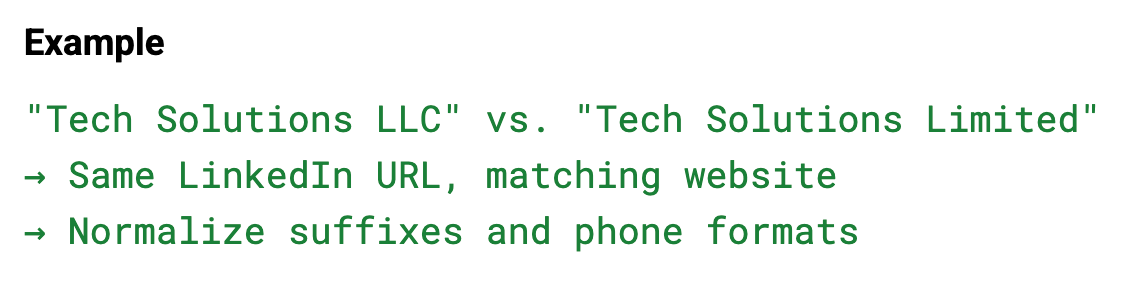

4.6 Company-Specific Rules

Company data brings its own matching challenges - legal suffixes, vanity domains, and contact details all play a role.

Common Business Rules

- Normalize suffixes: treat “Inc.”, “LLC”, and “Ltd.” as equivalent

- Standardize URLs (strip “www”, lowercase domains)

- Compare social links (e.g. LinkedIn company pages, etc)

- Normalize phone numbers (e.g. strip non-numeric characters)

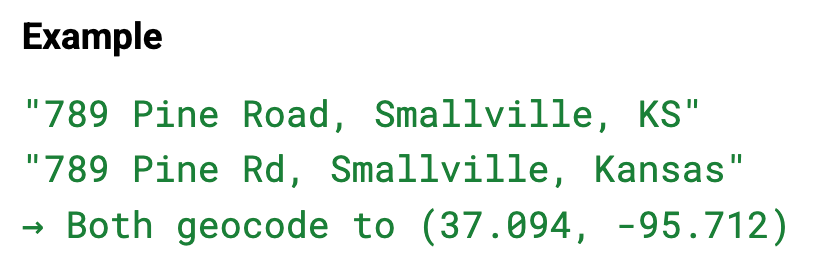

4.7 Geocoding and Location-Based Matching

Addresses can vary dramatically in format - but geographic coordinates are consistent, making them a powerful way to match records more accurately.

Using Latitude/Longitude for Address Comparison

Steps

- Use a geocoding service (e.g. Google Maps or OpenStreetMap) to convert addresses to lat/lon coordinates

- Compare the resulting coordinates using distance thresholds to define an acceptable margin of error (e.g. 5 miles).

Rule-based techniques are the backbone of many real-world entity resolution pipelines (including PDL’s). They’re transparent, fast and adaptable to domain knowledge.

In the next section, we’ll explore how to enhance these methods with some more advanced techniques.

5. Machine Learning Approaches

Rule-based systems are powerful, but have limits - especially when dealing with ambiguous records or large datasets with subtle inconsistencies. This is where machine learning can help by improving precision and recall at scale.

5.1 Supervised Learning

There are a wide range of Machine Learning (ML) techniques that can be applied to an entity resolution pipeline. In this section, we’ll cover a traditional supervised learning approach for probabilistic matching, which provides an easy entry point into this class of techniques.

Probabilistic Matching

Instead of hard rules, machine learning lets you work with likelihoods. Any classifier trained with the approach above will take in a set of similarity features and learn to output a probabilistic matching score that you can use to set thresholds or triage results.

Some of the key benefits of using probabilistic matching are:

- Handles noisy or incomplete data more robustly

- Can continue to improve with and learn with better data

- Tunable precision/recall depending on use case

Example Workflow

Here’s an example of what an ML-based workflow could look like:

- Label a set of record pairs as “Match” or “No Match”

- Extract similarity features for each pair of records

- Split your dataset into a training set and test set

- Train a model using the training set data

- Evaluate the model’s performance using the test dataset

Feature Engineering

The first step in ML-based entity resolution is transforming raw data into features that reflect similarity.

Some examples of such features could include:

- Name similarity score (e.g. Levenshtein Distance)

- Email exact match (binary 0 or 1)

- Address similarity score (e.g. Jaccard index or fuzzy match score)

- Phone number match (binary 0 or 1)

- Domain match from email or website

These collections of features become the input variables for the model, and should be generated in a consistent manner for each record in the training dataset as well as the test set.

Training Labels

For the training process, you will also need to create ground truth training labels by assigning pairs of records as “match” (1) or “no match” (0) based on prior knowledge.

Training Data vs Test Data

Next, you should divide your dataset up into a training set and a test set. Take care to ensure that the distribution of matches vs no matches is roughly similar across both datasets, and in particular ensure that no data from your test set is used in the training process.

Training a Classifier

Once the features are created, labels are assigned and the data is split, you can train a classifier model to learn what combinations of signals suggest a match. Model training should be done on only the training dataset. The test set should only be used for benchmarking the fully trained model after the training process has completed.

There are a wide range of machine learning models that can be used for classification ranging from simple decision trees to multi-layered neural networks. Some common and robust approaches to explore (especially when starting out) include:

- Random Forests

- XGBoost

- Linear Regression

Implementation Tips

- Balance your training and test data to include edge cases

- Track false positives and false negatives to adjust thresholds accordingly

- Explore leveraging feature importance outputs to refine or simplify your model

This section covers a simple example of how to apply a supervised classifier to your entity resolution pipeline, but there is an extremely wide range of additional machine learning approaches and techniques that can also be deployed. However, even this relatively simple solution allows you to begin tackling more complex entity resolution challenges and scale your approach confidently.

Because of how complimentary ML-based approaches are with rule-based strategies, we recommend applying the techniques from this section on top of the rule-based approaches from the previous section.

6. Conclusion

Entity resolution is a foundational process for any team working with large, messy, or multi-source datasets. By systematically cleaning, standardizing, and matching records, you unlock more accurate analytics, stronger automation, and better decision-making abilities.

We hope this guide has helped demystify the process and given you practical tools and knowledge to build and scale your own ER pipeline.

We believe that clean, connected data isn’t just a technical goal - it’s a strategic advantage.

Your PDL Solutions Engineering Team 💜