Purpose

Welcome! We’re excited to see you testing People Data Labs (PDL) to measure our fit into your central workflow. A well‑designed test validates your search relevance, parameter performance, and response quality using your own queries*. Think of this as due diligence: fine-tune your queries, confirm data coverage, and identify where filtering or post-processing may be needed.

*If you are instead looking to test our data via enrichment, you can find that guide here.

Pre‑test Checklist

- Create an API key in the API Dashboard.

- Read through our Person Schema or Company Schema so you know what fields are searchable and filterable.

- Read through our Query Limitations so you know what query types we offer.

- Identify key test objectives: are you prioritizing record count, field fill rates, record completeness, etc.?

- Draft a few (3-5) real-world queries aligned to your common production use cases

- Double-check field paths, operators and overall syntax of your SQL or ES query.

- Set the size parameter to a small number (e.g., 5 or 10) to manage credit usage while still returning a representative sample.

- Define your success criteria for each query. (See the section on Evaluating Results for more guidance.)
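
As a rough illustration of the checklist, the sketch below assembles a first test request with a deliberately small sample size. It assumes the search endpoint accepts a JSON body with `sql` and `size` keys; confirm the exact parameter names in the API reference before relying on them. The helper `build_search_request` and the query values are hypothetical, and the field names should be checked against the Person Schema.

```python
import json


def build_search_request(sql: str, size: int = 5) -> dict:
    """Assemble a search request body with a small sample size.

    Assumption: the API takes the query under "sql" and the page size
    under "size". Verify both names in the API reference.
    """
    if size < 1:
        raise ValueError("size must be at least 1")
    return {"sql": sql, "size": size}


# Illustrative query only: the values are placeholders, not verified
# canonical values.
sql = (
    "SELECT * FROM person "
    "WHERE job_title_role = 'engineering' "
    "AND location_locality = 'san francisco'"
)

request_body = build_search_request(sql, size=5)
print(json.dumps(request_body, indent=2))
```

Keeping the size in one place makes it easy to raise it later, once the query itself has stabilized.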

Best Practices

- Check out the API Dashboard Quickstart guide to get up and running quickly

- Once you have a solid grasp of the API Dashboard, check out the API Playground to start sending your first API calls.

- You should be able to describe each use case in a single, clear sentence (“I’m looking for small (<100 employees) companies in the Bay Area who specialize in Cybersecurity”). Use that as a guiding principle for constructing your queries.

- Be sure to make full use of our canonical field values for scoping your search.

- Fields like industry, job_title_role or job_title_levels are useful for adding relevance weighting.

- Start with a small size parameter while you are iterating on your query to avoid consuming unnecessary credits.

- Use the PDL-standardized full job titles like Chief Executive Officer instead of acronyms like CEO.

- Carefully evaluate your query (see below), as many questions can be resolved by understanding how our data is structured and how to search it most effectively.

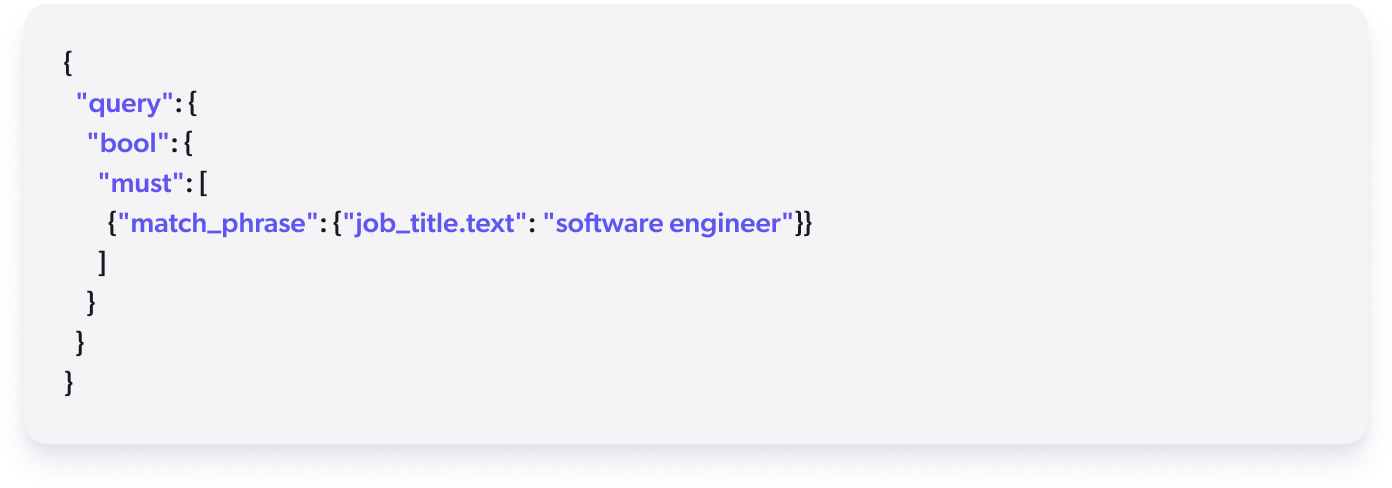

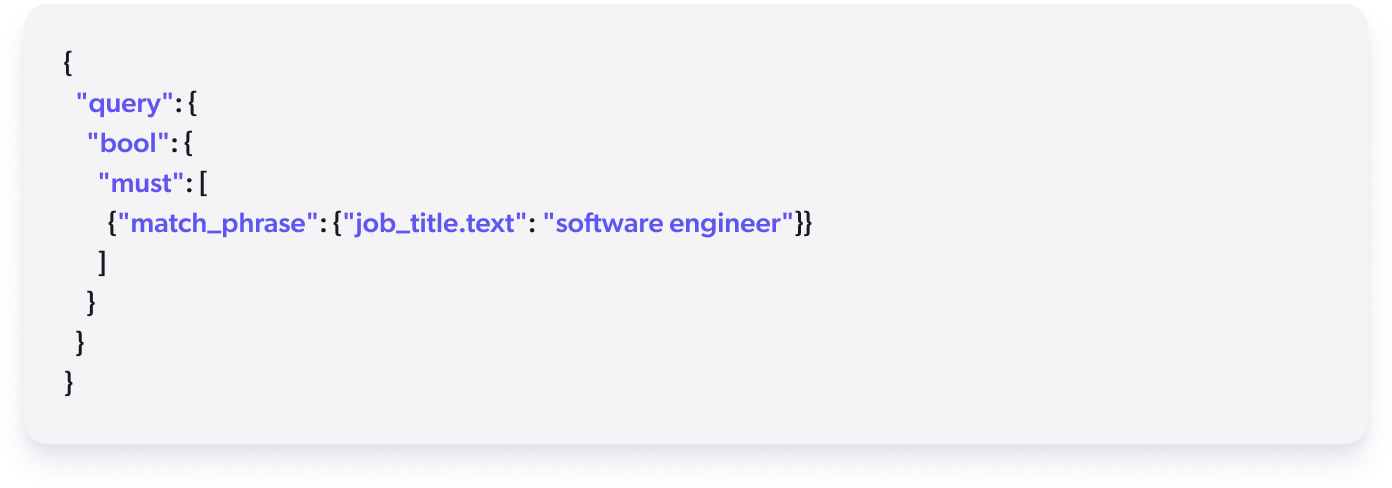

- For supported text fields, use match and match_phrase queries in Elasticsearch for more robust queries. See Elasticsearch Person & Company mappings for details
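
To make the practices above concrete, here is a minimal sketch that turns a one-sentence use case ("chief executive officers based in San Francisco") into an Elasticsearch-style query body. It assumes that `job_title` supports `match_phrase` and that `term`/`terms` filters are accepted on `job_title_levels` and `location_locality`; check the Elasticsearch mappings and Query Limitations to confirm. The function name and the example values (including `"cxo"`) are illustrative, not verified canonical values.

```python
def build_person_query(title_phrase: str, levels: list, locality: str) -> dict:
    """Build an Elasticsearch-style bool query for a person search.

    Assumptions: field names follow the Person Schema, and the listed
    query types are supported for these fields.
    """
    return {
        "query": {
            "bool": {
                "must": [
                    # Full standardized title rather than an acronym like "CEO".
                    {"match_phrase": {"job_title": title_phrase}},
                    # Seniority levels narrow the result set.
                    {"terms": {"job_title_levels": levels}},
                    # Locality scopes the search geographically.
                    {"term": {"location_locality": locality}},
                ]
            }
        }
    }


query = build_person_query(
    title_phrase="chief executive officer",
    levels=["cxo"],
    locality="san francisco",
)
```

Testing each clause on its own before combining them helps show which filter is responsible when the result count drops unexpectedly.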

Sample Size and Query Evaluation

You may need to iterate on your query structure as you refine your understanding of how fields behave within our dataset, and this is to be expected. Even experienced Elasticsearch and SQL users often require a few test rounds to balance precision and record count in their query. Below you will find potential issue signals and paired suggestions for improving your results.

While occasional duplicate or overlapping records may appear, they typically represent valid variations (e.g., multiple sources or values). Diversity in results is best evaluated by the range of industries, roles, or geographies returned relative to your filters.

Range of Normal Count Expectations*

- Title + region OR Tag + region → Thousands of results, varying in relevance

- Role + seniority + locality OR industry + locality → Dozens to hundreds, more targeted

- Company domain + title OR Company name + size → Precision search with tight filters, few results

*Ranges are highly dependent on exact parameters.

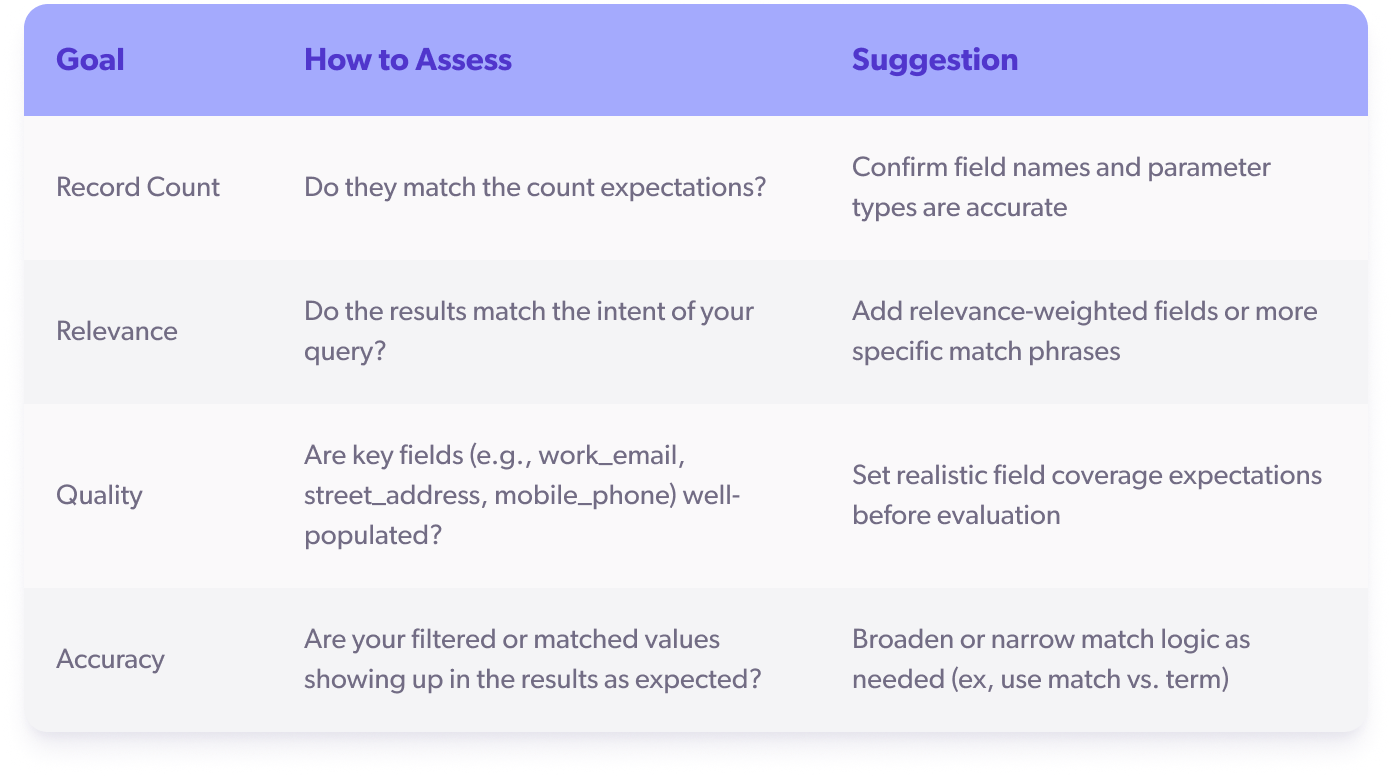

Evaluate Results

Once you are confident in your iterated queries, you can move on to evaluating the results they return. Unlike enrichment (which offers a clear pass/fail via match rate), evaluating search results is more nuanced. Exact success criteria will depend on your business use case: are you trying to populate a sales lead list, validate market presence, or power internal models?

Whatever the use case, you’ll want to be sure you have defined success criteria for each query, for example:

- “We need at least 2,000 results”

- “At least 40% of results should include location_locality and email”

- “At least 90% should be valid decision makers”
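
Criteria like the first two can be checked mechanically against a sample of results. The sketch below is one possible approach, assuming each returned record is a flat dictionary and that a missing, null, or empty value counts as unfilled. The function names and the sample records are made up for illustration; the `email` key mirrors the wording of the criterion above and may differ from the actual field name in the schema.

```python
def is_filled(value) -> bool:
    """Treat None, empty strings, and empty containers as unfilled."""
    return value not in (None, "", [], {})


def joint_fill_rate(records: list, fields: list) -> float:
    """Share of records in which every listed field is filled."""
    if not records:
        return 0.0
    hits = sum(all(is_filled(r.get(f)) for f in fields) for r in records)
    return hits / len(records)


def check_criteria(total, records, min_total, fields, min_joint_rate) -> dict:
    """Compare one query's results against its success criteria."""
    rate = joint_fill_rate(records, fields)
    return {
        "enough_results": total >= min_total,
        "joint_fill_rate": rate,
        "fill_rate_ok": rate >= min_joint_rate,
    }


# Toy sample (fabricated): two of five records have both fields filled.
sample = [
    {"location_locality": "san francisco", "email": "a@example.com"},
    {"location_locality": "oakland", "email": None},
    {"location_locality": None, "email": "b@example.com"},
    {"location_locality": "san jose", "email": "c@example.com"},
    {"location_locality": "", "email": ""},
]

report = check_criteria(
    total=2500,                 # hypothetical total reported for the query
    records=sample,
    min_total=2000,
    fields=["location_locality", "email"],
    min_joint_rate=0.40,
)
print(report)
```

A criterion such as "valid decision makers" usually still needs a manual review of the sample, since it depends on business judgment rather than on field presence.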

It’s also important to understand the field fill rates across our dataset to set reasonable expectations. For example, for all profiles with at least a LinkedIn URL, the fill rate differs across each field. If the fill rate for mobile_phone is 7.5%, expecting 80% of your matches to return a mobile_phone would be unreasonable as the data doesn’t exist for a broad portion of the records.
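
One way to sanity-check targets against baseline fill rates is sketched below. The rule it applies (flag any target more than `factor` times the dataset-wide fill rate) is a heuristic assumed here for illustration, not a PDL recommendation, because a well-filtered query can legitimately exceed the baseline by some margin. The 7.5% figure is the example from the paragraph above; the `location_locality` baseline is a hypothetical placeholder.

```python
def unrealistic_targets(targets: dict, baselines: dict, factor: float = 2.0) -> list:
    """Return fields whose target fill rate far exceeds the baseline.

    Heuristic (assumption): a target is flagged when it is more than
    `factor` times the dataset-wide fill rate for that field.
    """
    flagged = []
    for field, target in targets.items():
        baseline = baselines.get(field)
        if baseline is not None and target > baseline * factor:
            flagged.append(field)
    return flagged


baselines = {
    "mobile_phone": 0.075,       # example figure from the text
    "location_locality": 0.60,   # hypothetical placeholder
}
targets = {
    "mobile_phone": 0.80,
    "location_locality": 0.40,
}

flagged = unrealistic_targets(targets, baselines)
print(flagged)
```

Any flagged field is a prompt to revisit the success criterion before running the test, rather than a verdict on the data.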

Ultimately, your evaluation should answer one key question: Are the results good enough to move forward with PDL?

Quick Fixes if Results Still Aren’t Ideal

- Loosen overly specific filters (e.g., adjust job seniority or industry matching for broader recall).

- Use job_title_role instead of specific job titles.

- Standardize company domains and names (acme-inc.com → acme.com).

- Remove accents or special characters from names.

- Test each match query input individually to ensure they are working as expected.

- Double-check field names match our Person or Company Schema.

- Paginate deeper — ideal results aren’t always in the top 5 or 10.
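
Two of the input clean-ups above (removing accents and tidying domains) can be sketched with the Python standard library. The helper names are hypothetical. Note that this only strips schemes, paths, casing, and a leading `www.`; mapping an alias such as acme-inc.com to acme.com requires your own lookup table and is not attempted here.

```python
import unicodedata
from urllib.parse import urlparse


def strip_accents(text: str) -> str:
    """Remove diacritics by decomposing characters and dropping combining marks."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def normalize_domain(raw: str) -> str:
    """Reduce a URL or domain string to a bare lowercase host without 'www.'."""
    raw = raw.strip().lower()
    if "://" not in raw:
        raw = "//" + raw  # lets urlparse treat the input as a network location
    host = urlparse(raw).hostname or ""
    return host[4:] if host.startswith("www.") else host


print(strip_accents("José Núñez"))
print(normalize_domain("https://WWW.Acme.com/about"))
```

Applying the same normalization to every query input keeps test rounds comparable with each other.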

If your search still underperforms, please reach out to the Solutions Engineer you are working with or the Support team. We would be happy to provide any query or search guidance you need.

Resources & Self‑help Paths